Craigslist listcrawler tools offer a powerful way to access and analyze data from Craigslist, a vast online classifieds platform. These tools automate the process of extracting information from numerous listings, providing users with a structured dataset for various purposes. However, this seemingly simple process involves a complex interplay of web scraping techniques, legal considerations, and ethical responsibilities. Understanding these aspects is crucial for anyone considering using a Craigslist listcrawler.

This article delves into the functionality of Craigslist listcrawlers, exploring their technical aspects, legal and ethical implications, and potential applications. We’ll examine the different types of data extractable, discuss best practices for responsible scraping, and analyze the potential risks and rewards associated with this technology. We also explore alternative methods for data acquisition and address common security and privacy concerns.

Craigslist Listcrawlers: Functionality, Legalities, and Applications

Craigslist, a popular online classifieds platform, presents a rich source of data for researchers, businesses, and individuals. Listcrawlers, automated programs designed to extract data from Craigslist, offer a way to access and analyze this information efficiently. However, their use involves significant legal, ethical, and technical considerations. This article explores the functionality, legal and ethical implications, technical aspects, data analysis applications, security concerns, and alternatives to Craigslist listcrawlers.

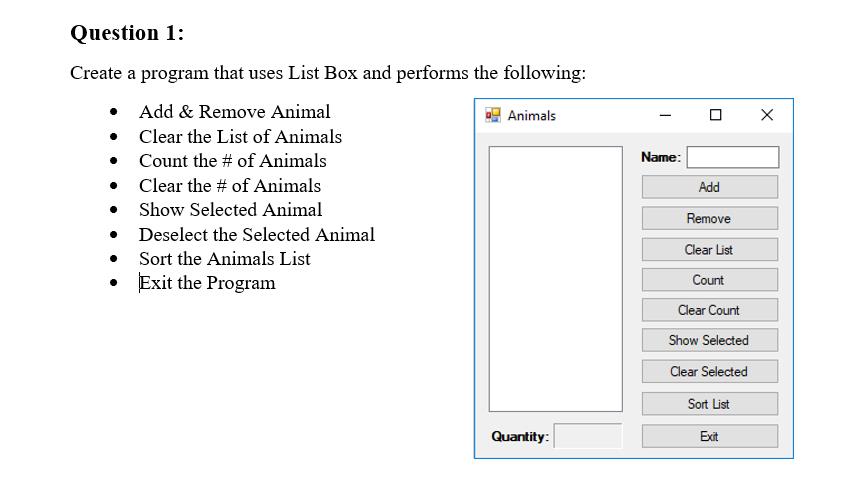

Craigslist Listcrawler Functionality

A Craigslist listcrawler is a software program that automatically retrieves data from Craigslist postings. It does this by mimicking a human user browsing the website: it follows hyperlinks, parses the HTML of each page, and extracts the relevant fields. It can gather various data points, including titles, descriptions, prices, locations, image metadata, and contact information.

Pagination and search result handling are crucial aspects; the crawler must navigate through multiple pages of results and filter data based on specified search criteria.

For example, a listcrawler searching for used cars in a specific city would need to parse search results, follow links to individual listings, extract details such as make, model, year, price, and mileage, and handle pagination to retrieve data across multiple pages of search results. The crawler might also identify and extract geographic coordinates from the listing’s description or map integration.
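The parse-and-paginate loop described above can be sketched as follows. This is a minimal illustration, not a working Craigslist scraper: the class names `result`, `title`, `price`, and `next` are hypothetical markup invented for the example, and `fetch` is an assumed callable that returns a page's HTML for a URL. It uses the standard library's `html.parser` rather than Beautiful Soup or Scrapy so that it has no dependencies.

```python
from html.parser import HTMLParser


class ListingParser(HTMLParser):
    """Collect title, url and price from hypothetical search-result markup."""

    def __init__(self):
        super().__init__()
        self.listings = []    # finished rows
        self.next_url = None  # href of the "next page" link, if any
        self._current = None  # row currently being built
        self._field = None    # text field we are inside ("title" or "price")

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "li" and "result" in classes:
            self._current = {"title": "", "url": None, "price": None}
        elif tag == "a" and "next" in classes:
            self.next_url = attrs.get("href")
        elif self._current is not None:
            if tag == "a" and "title" in classes:
                self._current["url"] = attrs.get("href")
                self._field = "title"
            elif tag == "span" and "price" in classes:
                self._field = "price"

    def handle_data(self, data):
        if self._current is None or self._field is None:
            return
        if self._field == "title":
            self._current["title"] += data.strip()
        elif self._field == "price":
            # Keep only the digits, so "$4,500" becomes 4500.
            digits = "".join(ch for ch in data if ch.isdigit())
            self._current["price"] = int(digits) if digits else None

    def handle_endtag(self, tag):
        if tag in ("a", "span"):
            self._field = None
        elif tag == "li" and self._current is not None:
            self.listings.append(self._current)
            self._current = None


def crawl(fetch, start_url, max_pages=5):
    """Follow "next" links from start_url and return every parsed listing."""
    results, url, seen = [], start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        parser = ListingParser()
        parser.feed(fetch(url))
        results.extend(parser.listings)
        url = parser.next_url
    return results
```

The `seen` set and the `max_pages` cap keep the loop from running forever if a site's "next" links ever form a cycle.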

| Pros | Cons |
|---|---|
| Automated data collection, saving significant time and effort. | Can be complex to develop and maintain. |
| Large-scale data acquisition, enabling comprehensive analysis. | Potential for legal and ethical violations. |
| Access to real-time data, providing up-to-date market insights. | Risk of overloading Craigslist servers, leading to IP bans. |
| Enables efficient price comparison and competitor analysis. | Requires technical expertise in programming and web scraping. |

Legal and Ethical Considerations

Scraping data from Craigslist raises legal and ethical concerns. Violating Craigslist’s terms of service, which typically prohibit automated scraping, can lead to legal action. Ethical considerations include respecting user privacy and avoiding data misuse. Overloading Craigslist servers through excessive scraping is also unethical and disruptive.

A hypothetical scenario: A listcrawler designed to collect personal contact information from Craigslist postings without user consent could violate privacy laws and lead to legal repercussions. Best practices include respecting robots.txt, implementing rate limiting to avoid server overload, and anonymizing data to protect user privacy.
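Two of the practices named above, honoring robots.txt and rate limiting, can be sketched with the Python standard library. This is an illustration under assumptions: the `build_robots`, `allowed_urls`, and `RateLimiter` names are made up for the example, and the robots.txt text is assumed to have been downloaded already. Passing a robots.txt check does not by itself make scraping permitted under a site's terms of service.

```python
import time
from urllib.robotparser import RobotFileParser


def build_robots(robots_txt):
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed_urls(urls, robots, user_agent):
    """Keep only the URLs that robots.txt allows for this user agent."""
    return [u for u in urls if robots.can_fetch(user_agent, u)]


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock  # injectable so the limiter can be tested
        self._sleep = sleep
        self._last = None    # time of the previous request, if any

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

A crawler would call `limiter.wait()` immediately before each request, and would skip any URL that `allowed_urls` filters out.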

Technical Aspects of Development

Building a Craigslist listcrawler typically involves programming languages like Python, often utilizing libraries such as Beautiful Soup and Scrapy. These libraries simplify the process of parsing HTML and extracting data. A robust crawler includes error handling mechanisms to manage issues like network errors, unexpected HTML changes, and data inconsistencies. Data is usually stored in databases like SQLite, PostgreSQL, or MongoDB, depending on the scale and complexity of the project.
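As a rough sketch of the storage step, the snippet below writes listings to SQLite using Python's built-in `sqlite3` module. The table layout and the `open_store` and `save_listings` helpers are assumptions made for illustration; a real project would choose columns to match the fields it actually extracts.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS listings (
    url      TEXT PRIMARY KEY,
    title    TEXT NOT NULL,
    price    INTEGER,
    location TEXT
)
"""


def open_store(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn


def save_listings(conn, rows):
    """Insert listings keyed by URL; a re-crawled URL replaces its old row."""
    with conn:  # commits on success, rolls back on error
        conn.executemany(
            "INSERT OR REPLACE INTO listings (url, title, price, location) "
            "VALUES (:url, :title, :price, :location)",
            rows,
        )
```

Keying on the listing URL means repeated crawls update existing rows instead of accumulating duplicates.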

Implementing basic error handling involves using try-except blocks to catch exceptions during the scraping process. For example, if a network error occurs while fetching a webpage, the crawler can retry the request after a delay, log the error, or skip the problematic URL. This ensures the crawler’s resilience and prevents it from crashing due to minor errors.

- Identify potential error points in the scraping process.

- Use try-except blocks to catch specific exceptions.

- Implement retry mechanisms for transient errors (e.g., network issues).

- Log errors for debugging and monitoring.

- Handle unexpected data formats gracefully.
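The checklist above can be sketched in a few lines. This is one possible shape, not a definitive implementation: `fetch_with_retry` and `parse_price` are hypothetical helper names, `fetch` is an assumed callable that downloads a URL, and the retry count and doubling delay are arbitrary defaults.

```python
import logging
import time
from urllib.error import URLError

logger = logging.getLogger("listcrawler")


def fetch_with_retry(fetch, url, retries=3, delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying transient network errors with a growing delay.

    Returns the fetched body, or None if every attempt failed.
    """
    for attempt in range(1, retries + 1):
        try:
            return fetch(url)
        except (URLError, TimeoutError, ConnectionError) as exc:
            logger.warning("attempt %d/%d failed for %s: %s",
                           attempt, retries, url, exc)
            if attempt < retries:
                # Wait 1x, 2x, 4x ... the base delay before trying again.
                sleep(delay * 2 ** (attempt - 1))
    logger.error("giving up on %s", url)
    return None


def parse_price(text):
    """Turn a string such as "$1,200" into 1200, or None if it is not a price."""
    try:
        return int(text.replace("$", "").replace(",", "").strip())
    except (ValueError, AttributeError):
        return None
```

Catching only specific exception types means a genuine bug, such as a `TypeError` in the parsing code, still surfaces instead of being silently retried.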

Data Analysis and Applications

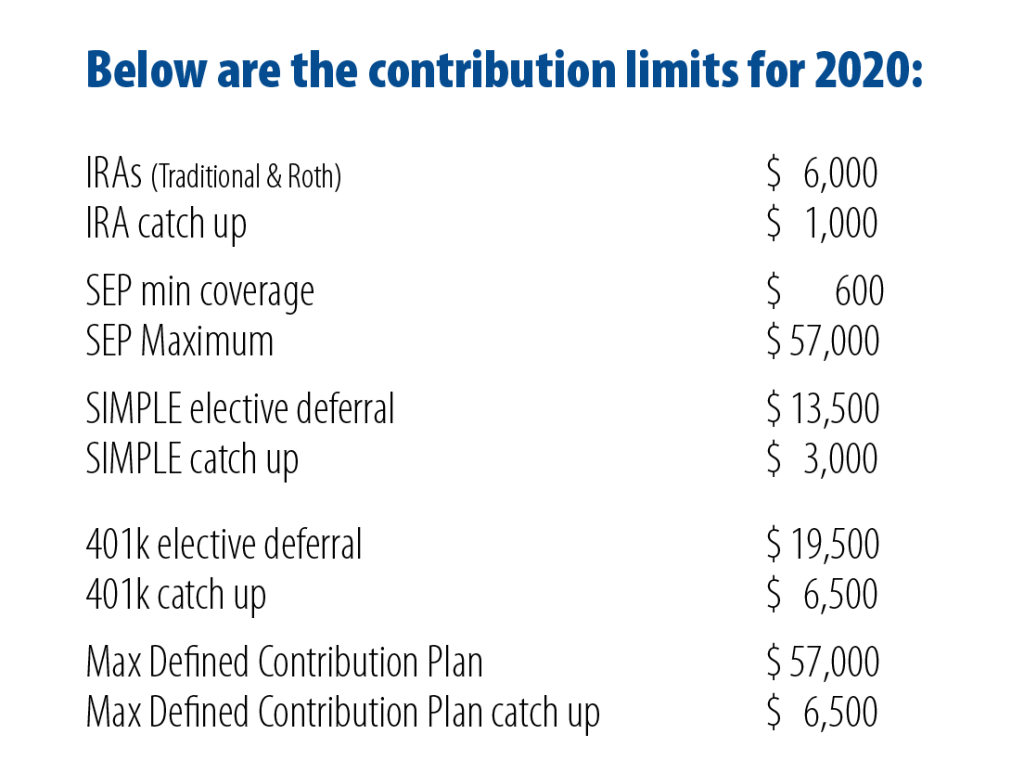

Data extracted from Craigslist can be used for various applications, including market research, trend analysis, and price comparison. Visualizing data through charts and graphs can reveal patterns and insights. For example, a real estate company could use a listcrawler to track rental prices in a specific area over time, identifying trends and making informed decisions about pricing and property investment.

A used car dealer might use a listcrawler to compare prices of similar vehicles across different sellers.

- Market research (e.g., pricing trends, demand analysis)

- Trend analysis (e.g., identifying popular items, seasonal fluctuations)

- Price comparison (e.g., finding the best deals on products or services)

- Competitor analysis (e.g., tracking competitor pricing and inventory)

- Real estate analysis (e.g., identifying rental trends, property values)
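As an illustration of the rental-trend and price-comparison use cases, the sketch below groups already-extracted listings by a chosen field and reports the median price for each group. The `median_price_by` helper and the `month` and `price` field names are assumptions for the example; the sample rows in the usage note are made up.

```python
from collections import defaultdict
from statistics import median


def median_price_by(rows, key):
    """Group rows by the given field and return the median price per group.

    Rows without a price are skipped rather than counted as zero.
    """
    groups = defaultdict(list)
    for row in rows:
        if row.get("price") is not None:
            groups[row.get(key)].append(row["price"])
    return {group: median(prices) for group, prices in groups.items()}
```

Called as `median_price_by(rentals, "month")`, it returns one median per month, which is the kind of series a chart of rental prices over time would plot. The median is used instead of the mean because a single mispriced or joke listing would otherwise skew the result.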

Security and Privacy Concerns

The data a listcrawler collects is itself a security liability: if the store is poorly protected, it is exposed to unauthorized access and data breaches. Data anonymization and privacy protection are therefore paramount. Robust security practices include secure storage of extracted data, encryption of sensitive information, and regular security audits.

Examples of security measures include using secure protocols (HTTPS), input validation to prevent injection attacks, and regularly updating libraries to patch known vulnerabilities.
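One way to approach the anonymization mentioned above is to strip contact details from free text and replace stable identifiers with keyed hashes before anything is stored. The sketch below is a simplified assumption of how that could look: the regular expressions cover only common email and US-style phone formats and will miss others, and `redact_contacts` and `pseudonymize` are hypothetical helper names.

```python
import hashlib
import hmac
import re

# Deliberately simple patterns; real-world contact formats vary widely.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def redact_contacts(text):
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[email]", text)
    return PHONE_RE.sub("[phone]", text)


def pseudonymize(value, secret):
    """Map a value to a stable keyed hash so records can be linked
    without storing the original identifier. secret must be bytes."""
    digest = hmac.new(secret, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]
```

Using a keyed HMAC instead of a plain hash means someone who obtains the dataset cannot confirm a guessed phone number or email without also having the secret key.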

Alternatives to Listcrawlers

Alternatives to listcrawlers include manually collecting data, using Craigslist’s official APIs (if available), or employing third-party data providers that aggregate Craigslist information. Each approach has its advantages and disadvantages in terms of cost, time, data quality, and legality. Manually collecting data is time-consuming, while relying on third-party providers may be expensive and limit control over data access.

| Method | Pros | Cons |
|---|---|---|
| Listcrawler | Automated, large-scale data collection | Legal and ethical concerns, technical complexity |
| Manual Data Collection | Simple, avoids legal issues | Time-consuming, limited scale |
| Third-party Data Providers | Convenient, pre-processed data | Costly, potential data limitations |
| Craigslist API (if available) | Official, legal data access | Limited data availability, rate limits |

Closing Notes

Ultimately, the use of Craigslist listcrawlers presents a double-edged sword. While offering valuable insights and facilitating efficient data analysis, it necessitates a thorough understanding of the legal, ethical, and security implications. Responsible development and deployment, coupled with adherence to Craigslist’s terms of service and best practices for data handling, are paramount to harnessing the power of this technology while mitigating potential risks.

Careful consideration of alternatives and a commitment to ethical data acquisition should guide any undertaking involving Craigslist data scraping.